DeepSeek is a game changer for sustainable AI

It shows the world that we can advance AI and win for the planet at the same time

There are probably many things you’ve heard about DeepSeek, but the part I’m most excited about is what it means for the power consumption of AI. As artificial intelligence models grow more powerful, they're also becoming increasingly energy-hungry. But what if we could build powerful AI systems that use significantly less energy? That's where DeepSeek comes in.

The biggest difference is the architecture: it uses less energy to do the same work, and more

DeepSeek represents a fundamental rethinking of how AI systems are built. I really appreciated Rahul Sandils’s explanation:

"If traditional AI models are like power-hungry supercomputers, DeepSeek AI is more like a distributed network of smart, energy-efficient devices working together. It's not just about doing more with less; it's about doing it more intelligently."

DeepSeek's training emitted more than an order of magnitude (~26x) less carbon, without compromising performance

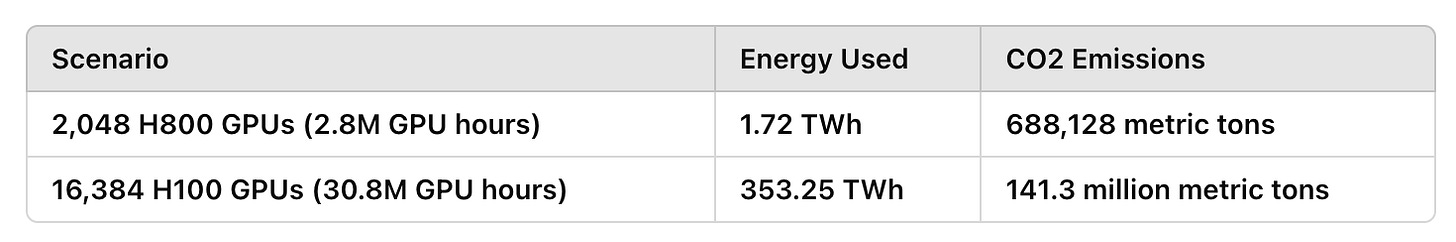

I don’t think any official reports have come out yet, but according to one article, DeepSeek was trained on 2,048 Nvidia H800 GPUs using approximately 2.8 million GPU hours. For comparison, Meta's Llama 3 used 30.8 million GPU hours on 16,384 H100 GPUs.
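A GPU hour is one GPU running for one hour, so both totals already include the GPU count. As a rough sketch (Python; `wall_clock_hours` is a hypothetical helper, and it assumes every GPU ran for the entire job, which real training runs only approximate), the implied wall-clock training time is just GPU hours divided by the number of GPUs:

```python
def wall_clock_hours(gpu_hours: float, num_gpus: int) -> float:
    """Approximate wall-clock time, assuming all GPUs ran for the whole job."""
    return gpu_hours / num_gpus


# Figures quoted in the article; wall-clock time is derived, not reported.
runs = {
    "DeepSeek (2,048 H800)": (2_800_000, 2_048),
    "Llama 3 (16,384 H100)": (30_800_000, 16_384),
}

for name, (gpu_hours, num_gpus) in runs.items():
    hours = wall_clock_hours(gpu_hours, num_gpus)
    print(f"{name}: {hours:,.0f} h ≈ {hours / 24:.0f} days")

# DeepSeek (2,048 H800): 1,367 h ≈ 57 days
# Llama 3 (16,384 H100): 1,880 h ≈ 78 days
```

This is also why an energy estimate should multiply GPU hours by the power of a single GPU: multiplying by the whole cluster's power would count every GPU twice.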

I used ChatGPT to work out how much more efficient DeepSeek is (please let me know if you agree with the calculations in the Appendix below). Here is the summary table:

Breaking Down the Efficiency Gains

To understand just how significant DeepSeek's efficiency improvements are, let's look at a comparison with another recent AI model, Meta's Llama 3:

DeepSeek's Training

Used 2,048 GPUs

Required 2.8 million GPU hours

Estimated carbon footprint: 336 metric tons of CO2

Llama 3's Training

Used 16,384 GPUs

Required 30.8 million GPU hours

Estimated carbon footprint: 8,624 metric tons of CO2

To put these numbers in perspective, the US EPA says that an average home emits 7.27 metric tons of CO2 per year from electricity use alone. For these calculations, I use 6.1 metric tons instead, to account for green sources of energy.

DeepSeek model training emissions: equal to the annual electricity emissions of roughly 55 average US homes

Llama 3 model training emissions: equal to the annual electricity emissions of roughly 1,400 average US homes
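Converting a carbon figure into homes is a single division by the per-home number. A minimal sketch, where `homes_equivalent` is a hypothetical helper and the default of 6.1 t CO2 per home per year is the adjusted figure chosen above rather than an official EPA value:

```python
# Assumed per-home figure: 6.1 t CO2 per year from electricity (the article's
# adjustment of the EPA's 7.27 t to account for green energy sources).
TONNES_CO2_PER_HOME = 6.1


def homes_equivalent(tonnes_co2: float, per_home: float = TONNES_CO2_PER_HOME) -> float:
    """Number of homes whose annual electricity emissions equal `tonnes_co2`."""
    return tonnes_co2 / per_home
```

Passing `per_home=7.27` instead gives the comparison against the unadjusted EPA figure.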

Why This Matters

The implications of this breakthrough extend far beyond just saving energy. It demonstrates that:

Sustainability Doesn't Mean Compromise: DeepSeek shows we can achieve high performance while significantly reducing environmental impact.

Innovation Through Constraints: Sometimes, limitations can drive creative solutions. DeepSeek's architects used environmental and resource constraints as motivation to fundamentally rethink AI architecture.

Democratizing AI: Lower energy requirements mean more organizations and countries can participate in AI development, even with limited resources.

Looking Forward: The Global Impact

This breakthrough has particular significance for developing nations. Take India: rather than viewing limited resources as a barrier, it can follow DeepSeek's example and use those constraints to drive innovation in sustainable AI development.

More importantly, DeepSeek demonstrates that what once seemed impossible – dramatically reducing AI's carbon footprint without sacrificing performance – is actually achievable. This opens new possibilities for sustainable AI development worldwide.

My takeaway is that constraints can be a feature, and not a bug

I’ve said it many times, but the reason I think India can be a leader in sustainable AI is the same reason DeepSeek is so innovative: its team used the geopolitical, environmental, and other constraints of the time to question first principles and fundamentally redesign their approach. Through this process, the team achieved breakthrough efficiency gains that could help make AI development more sustainable and accessible globally.

This isn't just about building better AI – it's about building smarter, more sustainable systems that can benefit everyone while protecting our planet.

Appendix

Summary Table:

Calculations:

Case 1: 2,048 Nvidia H800 GPUs for 2.8 million GPU hours

Power Consumption of H800:

300W (0.3 kW) per GPU.

Total Power Consumption:

$$0.3\,\text{kW} \times 2{,}048\ \text{GPUs} = 614.4\,\text{kW}$$

Total Energy Consumption:

Because 2.8 million is already a GPU-hour total (the running time of every GPU added together), it is multiplied by the per-GPU power, not the whole cluster's power. Equivalently, the 614.4 kW cluster ran for 2,800,000 ÷ 2,048 = 1,367.1875 wall-clock hours (about 57 days), and 614.4 kW × 1,367.1875 h gives the same result:

$$0.3\,\text{kW} \times 2{,}800{,}000\ \text{GPU-hours} = 840{,}000\,\text{kWh}\ (840\,\text{MWh})$$

CO2 Emissions (Global Average):

Using 0.4 kg CO2/kWh, emissions =

$$840{,}000\,\text{kWh} \times 0.4\,\text{kg CO}_2/\text{kWh} = 336{,}000\,\text{kg CO}_2$$

Total CO2 emissions = 336 metric tons of CO2.

Case 2: 16,384 Nvidia H100 GPUs for 30.8 million GPU hours

Power Consumption of H100:

700W (0.7 kW) per GPU.

Total Power Consumption:

$$0.7\,\text{kW} \times 16{,}384\ \text{GPUs} = 11{,}468.8\,\text{kW}$$

Total Energy Consumption:

Because 30.8 million is already a GPU-hour total, it is multiplied by the per-GPU power, not the whole cluster's power. Equivalently, the 11,468.8 kW cluster ran for 30,800,000 ÷ 16,384 ≈ 1,880 wall-clock hours (about 78 days):

$$0.7\,\text{kW} \times 30{,}800{,}000\ \text{GPU-hours} = 21{,}560{,}000\,\text{kWh}\ (21.56\,\text{GWh})$$

CO2 Emissions (Global Average):

Using 0.4 kg CO2/kWh, emissions =

$$21{,}560{,}000\,\text{kWh} \times 0.4\,\text{kg CO}_2/\text{kWh} = 8{,}624{,}000\,\text{kg CO}_2$$

Total CO2 emissions = 8,624 metric tons of CO2.
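To make it easy to rerun these estimates with different assumptions, here is a minimal Python sketch of the same arithmetic. The `TrainingRun` class is a hypothetical name, and the wattages (0.3 kW for the H800, 0.7 kW for the H100) and the 0.4 kg CO2/kWh grid factor are the assumptions used in the cases above, not measured values. It counts GPU power only, so cooling, networking, and other datacenter overhead are left out:

```python
from dataclasses import dataclass

# Assumed global-average grid carbon intensity (kg CO2 per kWh).
GRID_KG_CO2_PER_KWH = 0.4


@dataclass
class TrainingRun:
    name: str
    gpu_hours: float   # already summed over all GPUs
    kw_per_gpu: float  # assumed average power draw of one GPU

    def energy_kwh(self) -> float:
        # GPU hours x per-GPU power; the GPU count must NOT be multiplied in again.
        # GPU power only: no cooling or other datacenter overhead (PUE).
        return self.gpu_hours * self.kw_per_gpu

    def co2_tonnes(self, kg_per_kwh: float = GRID_KG_CO2_PER_KWH) -> float:
        return self.energy_kwh() * kg_per_kwh / 1_000


deepseek = TrainingRun("DeepSeek (H800)", 2_800_000, 0.3)
llama3 = TrainingRun("Llama 3 (H100)", 30_800_000, 0.7)

for run in (deepseek, llama3):
    print(f"{run.name}: {run.energy_kwh():,.0f} kWh, {run.co2_tonnes():,.0f} t CO2")
print(f"Ratio: {llama3.co2_tonnes() / deepseek.co2_tonnes():.1f}x")

# DeepSeek (H800): 840,000 kWh, 336 t CO2
# Llama 3 (H100): 21,560,000 kWh, 8,624 t CO2
# Ratio: 25.7x
```

Because energy scales linearly with `kw_per_gpu`, changing either wattage assumption changes the final ratio by the same factor, so the per-GPU power figures are the inputs most worth double-checking.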